News

- Jan. 2026: Three papers were accepted by ICLR 2026.

- Sept. 2025: Tongyi DeepResearch was released; one paper was accepted by NeurIPS 2025 and selected as a spotlight presentation, and one paper was accepted by TMLR.

- May 2025: One paper was accepted by ICML 2025.

- Jan. 2025: Three papers were accepted by ICLR 2025, and MMIE was selected as an oral presentation.

- Dec. 2024: Gave an invited talk at Cohere For AI; one paper was accepted by COLING 2025 and two papers were accepted by AAAI 2025.

- Sept. 2024: One paper was accepted by NeurIPS 2024 and one paper was accepted by EMNLP 2024.

- Jul. 2024: One paper was accepted by ECCV 2024.

- Jun. 2024: Two papers were accepted by MICCAI 2024 and one was early accepted.

- Sept. 2023: One paper was accepted by NeurIPS 2023.

- Aug. 2022: Shared a paper list on multi-modal learning in medical imaging.

|

WebWatcher: Breaking New Frontiers of Vision-Language Deep Research Agent

Xinyu Geng*, Peng Xia*, Zhen Zhang*, Xinyu Wang, Qiuchen Wang, Ruixue Ding, Chenxi Wang, Jialong Wu, Yida Zhao, Kuan Li, Yong Jiang, Pengjun Xie, Fei Huang, Jingren Zhou

International Conference on Learning Representations (ICLR), 2026.

[Paper]

[Code]

|

MMedAgent-RL: Optimizing Multi-Agent Collaboration for Multimodal Medical Reasoning

Peng Xia, Jinglu Wang, Yibo Peng, Kaide Zeng, Xian Wu, Xiangru Tang, Hongtu Zhu, Yun Li, Yan Lu, Huaxiu Yao

International Conference on Learning Representations (ICLR), 2026.

[Paper]

[Code]

|

MMIE: Massive Multimodal Interleaved Comprehension Benchmark For Large Vision-Language Models.

Peng Xia*, Siwei Han*, Shi Qiu*, Yiyang Zhou, Zhaoyang Wang, Wenhao Zheng, Zhaorun Chen, Chenhang Cui, Mingyu Ding, Linjie Li, Lijuan Wang, Huaxiu Yao

International Conference on Learning Representations (ICLR), 2025. (Oral)

[Paper]

[Code]

[Project Page]

|

AnyPrefer: An Automatic Framework for Preference Data Synthesis.

Yiyang Zhou*, Zhaoyang Wang*, Tianle Wang*, Shangyu Xing, Peng Xia, Bo Li, Kaiyuan Zheng, Zijian Zhang, Zhaorun Chen, Wenhao Zheng, Xuchao Zhang, Chetan Bansal, Weitong Zhang, Ying Wei, Mohit Bansal, Huaxiu Yao

International Conference on Learning Representations (ICLR), 2025.

[Paper]

|

CARES: A Comprehensive Benchmark of Trustworthiness in Medical Vision Language Models

Peng Xia, Ze Chen, Juanxi Tian, Yangrui Gong, Ruibo Hou, Yue Xu, Zhenbang Wu, Zhiyuan Fan, Yiyang Zhou, Kangyu Zhu, Wenhao Zheng, Zhaoyang Wang, Xiao Wang, Xuchao Zhang, Chetan Bansal, Marc Niethammer, Junzhou Huang, Hongtu Zhu, Yun Li, Jimeng Sun, Zongyuan Ge, Gang Li, James Zou, Huaxiu Yao

The Conference on Neural Information Processing Systems (NeurIPS), 2024

[Paper]

[Code]

[Project Page]

|

Invited Talks

- Jan. 2026: MiroMind, Agent0 & Agent0-VL: Unleashing Self-Evolving Agents via Tool-Integrated Reasoning.

- Jul. 2025: TechBeat, MMed-RAG: Versatile Multimodal RAG System for Medical Vision Language Models.

- Apr. 2025: ICLR Oral Session, Massive Multimodal Interleaved Comprehension Benchmark For Large Vision-Language Models.

- Dec. 2024: Cohere For AI, Reliable Multimodal RAG for Factuality in Medical Vision Language Models. [Video] [Cohere Event] [X/Twitter] [Linkedin]

- Oct. 2024: School of Computer Science, Soochow University, Reliable Multimodal RAG in Medical Vision Language Models.

- Oct. 2024: AI TIME, EMNLP Seminar, Reliable Multimodal RAG in Medical Vision Language Models.

- Oct. 2024: NICE-NLP, EMNLP Seminar, Reliable Multimodal RAG in Medical Vision Language Models.

|

|

Press

- "Tongyi DeepResearch" was covered by 机器之心, 量子位, 新智元, IBM News, China Economic News, South China Morning Post, ForkLog, Apidog, Medium, Towards AI, MarkTechPost, VentureBeat, Geeky Gadgets, ASO World, AIBase.

- "WebWatcher: Breaking New Frontiers of Vision-Language Deep Research Agent" was covered by 量子位, AK, So Essentially, BYCLOUD AI, Beehiiv, Medium Towards Dev, Daily AI Newsletter by Rohan.

- "MMed-RAG: Versatile Multimodal RAG System for Medical Vision Language Models" was covered by Banff International Research Station, MarkTechPost, Moonlight Press, neptune.ai.

|

|

Selected Honors & Awards

- ICDM UGHS 2025 Rising Star Award & Best Poster Award, 2025

- NeurIPS Spotlight Presentation (Top 5%), 2025

- Stars of Tomorrow Excellent Intern Award, Microsoft Research, 2025

- KDD 2025 Health Day Distinguished Vision Award, 2025

- ICLR Travel Award, 2025

- ICLR Oral Presentation (Top 1.8%), 2025

- Third Place, Shanghai-HK Interdisciplinary Shared Tasks (Task 1), 2022

- Second Prize, The 3rd Huawei DIGIX AI Algorithm Contest, 2021

|

|

Academic Services

- Area Chair: ACL Rolling Review (ARR) (2025)

- Conference Reviewer: NeurIPS (2024, 2025), NeurIPS D&B Track (2024, 2025), ICML (2024, 2025, 2026), ICLR (2025, 2026), CVPR (2025, 2026), ICCV (2025), ACL Rolling Review (ARR) (2024, 2025, 2026), ECCV (2026), MICCAI (2024, 2025), WACV (2025, 2026), AAAI (2026)

- Journal Reviewer: IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), International Journal of Computer Vision (IJCV), IEEE Transactions on Medical Imaging (TMI), Cell Patterns, Knowledge-Based Systems (KBS), Expert Systems with Applications (ESWA), Pattern Recognition (PR)

- Student Volunteer: EMNLP (2024)

- Workshop Co-Organizer: ICML 2025 Workshop on Reliable and Responsible Foundation Models

|

Teaching

- Teaching Assistant, DATA 110, School of Data Science and Society, UNC-Chapel Hill, Spring 2026.

|

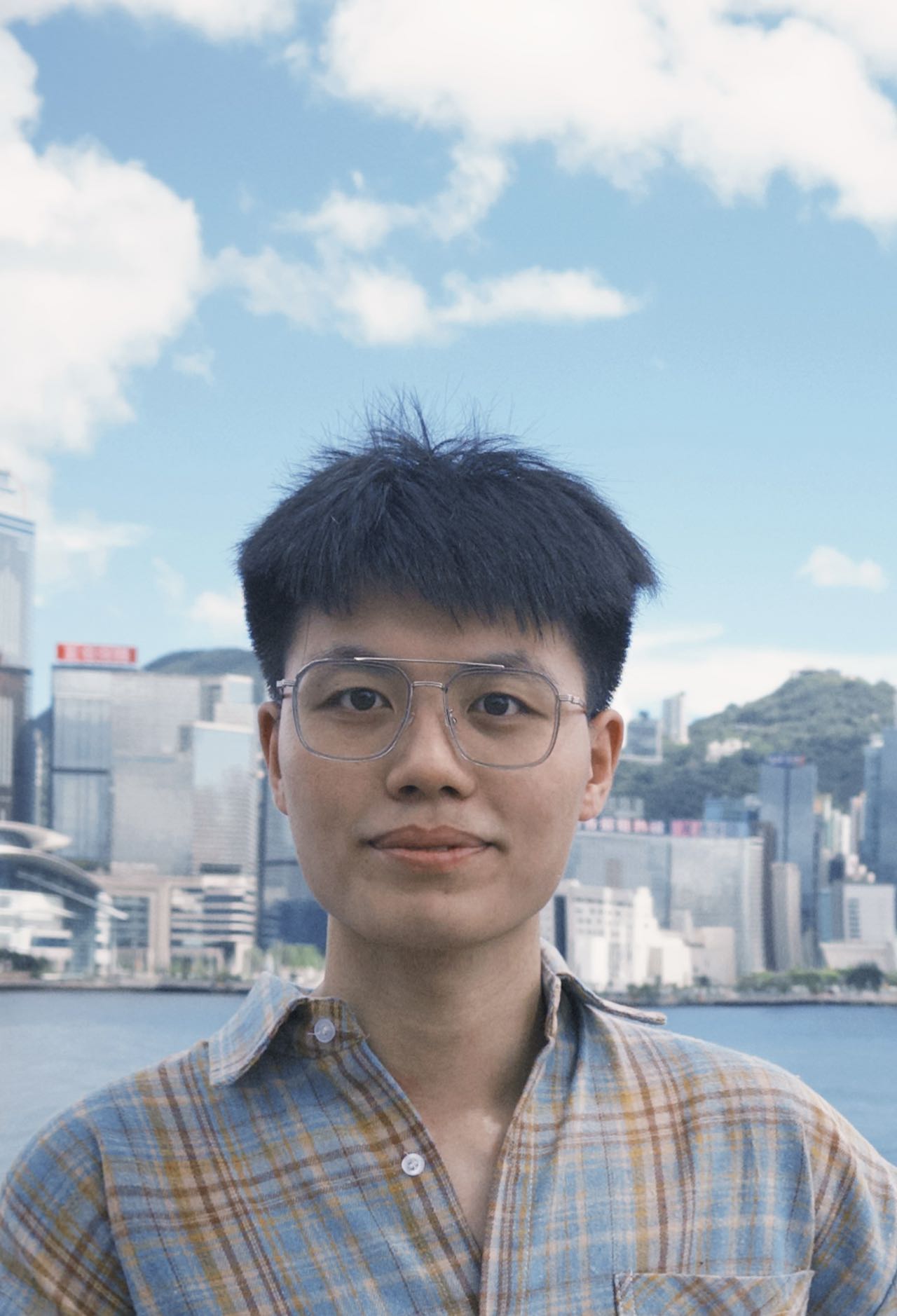

© Peng Xia | Last updated:

| |

Technical Report, 2025.
